Tutorial: Host a website for a small company on IBM Cloud

Saturday, 19 December 2020

Friday, 18 December 2020

Real-time auditing by Kubernetes with Falco

Securing Kubernetes has gone from an afterthought to a first-class requirement as Kubernetes becomes the platform of choice for both software development and deployment. This guide covers auditing the operation of Kubernetes clusters in real time and building a framework to log and manage audit events automatically.

Kubernetes is API driven. Every user, administrator, and developer interacts with the API in one way or another. When a user issues a deployment command with kubectl, the request hits the API and is recorded by the Kubernetes audit mechanism. There are also non-human API interactions to record and inspect: operators run against the API with their own sets of credentials, and cloud vendor external systems touch the API as well. All of these interactions should be logged, audited, and processed, with notifications sent out if out-of-compliance activity is observed.
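To make the idea of an audit record concrete, here is a minimal Python sketch that pulls the "who did what" fields out of a single audit event. The field names (user.username, verb, requestURI, responseStatus.code) follow the Kubernetes audit.k8s.io/v1 Event schema; the sample event itself is hypothetical and heavily trimmed, and the function name is an illustration, not part of any tool used in this tutorial.

```python
import json

# Hypothetical, trimmed-down audit event. Real events carry many more fields.
SAMPLE_EVENT = json.dumps({
    "kind": "Event",
    "apiVersion": "audit.k8s.io/v1",
    "verb": "create",
    "requestURI": "/apis/apps/v1/namespaces/default/deployments",
    "user": {"username": "alice@example.com"},
    "responseStatus": {"code": 201},
})


def summarize_audit_event(raw):
    """Return who acted, which verb they used, the URI, and the response code."""
    event = json.loads(raw)
    return {
        "user": event.get("user", {}).get("username", "<unknown>"),
        "verb": event.get("verb", "<unknown>"),
        "uri": event.get("requestURI", "<unknown>"),
        "code": event.get("responseStatus", {}).get("code"),
    }


summary = summarize_audit_event(SAMPLE_EVENT)
print(summary["user"], summary["verb"], summary["uri"], summary["code"])
```

Falco applies its rules to events of this shape, so the same four fields show up again in the alerts later in this tutorial.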

Prerequisites

1. An IBM Cloud account. If you don't have one, sign up at https://cloud.ibm.com/registration?cm_sp=ibmdev-_-developer-tutorials-_-cloudreg.

2. A Kubernetes cluster on IBM Cloud. You can create one at https://cloud.ibm.com/kubernetes/catalog/cluster?cm_sp=ibmdev-_-developer-tutorials-_-cloudreg

Steps

Step 1. Configure Kubernetes auditing

Step 2. Set up Kubernetes auditing

Step 3. Set up forwarding of events from Falco into LogDNA

Step 1. Configure Kubernetes auditing

Let's get started. Kubernetes can be configured to emit an audit event any time its API is accessed. You can process these events with Falco in real time to quickly identify the source of malicious activity.

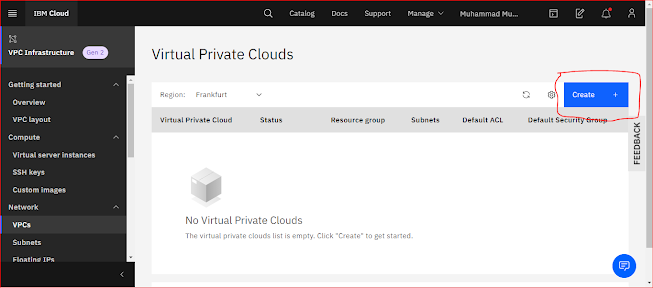

1. Use the IBM Cloud Virtual Private Cloud (VPC) service to create a virtual machine (VM) (Generation 2).

2. Get the floating (public) IP address of your virtual machine.

$ ibmcloud target -r us-east
Switched to region us-east

API endpoint: https://cloud.ibm.com
Region: us-east
User: skrum@us.ibm.com
Account: Cloud Open Source (xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx) <-> xxxxxxx
Resource group: No resource group targeted, use 'ibmcloud target -g RESOURCE_GROUP'
CF API endpoint:
Org:
Space:

$ ibmcloud is instances
Listing instances for generation 2 compute in all resource groups and region us-east under account Cloud Open Source as user skrum@us.ibm.com...
ID   Name   Status   Address   Floating IP   Profile   Image   VPC   Zone   Resource group
xxxx_xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx   nibz-falco-dev   running   10.241.128.4   52.xxx.xxx.xxx   bx2-8x32   ibm-ubuntu-18-04-1-minimal-amd64-2   nibz   us-east-3   Default

3. Install Python on the VM, which Ansible needs on the host it manages. Here ${remote_ip} is the floating IP from the previous step.

ssh ${remote_ip} 'sudo apt-get update; sudo apt-get -y install python'

4. Set up an inventory list for Ansible, replacing 192.168.0.10 with the server's IP or DNS name.

[cloud]
192.168.0.10 ansible_ssh_user=ubuntu

5. Initialize ansible-galaxy.

ansible-galaxy init

6. Get the ansible-sshd Ansible role.

ansible-galaxy install willshersystems.sshd

7. Get the ansible-falco Ansible role.

git clone https://github.com/juju4/ansible-falco

8. Create a playbook file to use the Ansible role, named falco-install.yaml here to match the command in the next step. Note the hosts: field if you customized anything in the inventory file.

---
- hosts: cloud
  become: yes
  vars:
    falco_grpc_unix_enabled: true
    falco_webserver_enable: true
    # falco_dev: true
    sshd:
      GSSAPIAuthentication: no
      ChallengeResponseAuthentication: no
      PasswordAuthentication: no
      PermitRootLogin: no
  roles:
    - role: willshersystems.sshd
    - role: ansible-falco

9. Run the Ansible playbook to install Falco.

ansible-playbook -i inventory falco-install.yaml

10. SSH to the host to validate the configuration.

ps -ef | grep falco
tail -f /var/log/falco/falco.log

You should see messages showing that Falco is running, but nothing specific to Kubernetes yet.

Step 2. Set up Kubernetes auditing

The IBM Cloud Kubernetes Service manages the Kubernetes daemons for you. Configuring Kubernetes auditing normally means changing the command-line arguments of the Kubernetes API server. Because the service manages the API server, IBM Cloud instead provides commands to manage the audit webhook and where it points. You can read more about this in the product documentation.

ibmcloud ks cluster master audit-webhook set --cluster <cluster_name> --remote-server http://<server_floating_ip>:8765/k8s_audit

ibmcloud ks cluster master audit-webhook get --cluster <cluster_name>

# refresh (takes a few seconds)

ibmcloud ks cluster master refresh --cluster <cluster_name>

Poke a hole in the firewall or security group for your instance for Kubernetes audit events.

ibmcloud is security-group-rule-add xxxx-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx inbound tcp --port-min 8765 --port-max 8765 --output JSON

{
  "direction": "inbound",
  "id": "xxxx-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
  "ip_version": "ipv4",
  "port_max": 8765,
  "port_min": 8765,
  "protocol": "tcp",
  "remote": {
    "cidr_block": "0.0.0.0/0"
  }
}

That should be it. Now you can check out your logs!
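If no audit events show up, one thing worth ruling out is that port 8765 is still blocked. The following Python sketch tries a plain TCP connection to the port. The helper name port_is_open is hypothetical, and a successful connection from your workstation only shows that the port accepts connections from where you run the check; it does not prove that the cluster master can reach it.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, port_is_open("<server_floating_ip>", 8765) should return True once the security group rule is in place and Falco's web server is listening.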

Edit your rules by modifying the Kubernetes rules under /etc/falco.

View the logs with the following command:

tail -f /var/log/falco/falco.log

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:20.110256128: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=kube-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:22.139158016: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-controller-manager target=kube-scheduler/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-scheduler?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:23.199183104: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=kube-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:25.244869888: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-controller-manager target=kube-scheduler/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-scheduler?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:26.293675008: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=kube-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:28.339374080: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-controller-manager target=kube-scheduler/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-scheduler?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:29.416363008: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=kube-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:31.453209088: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-controller-manager target=kube-scheduler/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-scheduler?timeout=15s resp=200)

Jul 31 21:52:36 nibz-falco-dev falco: 21:52:35.614809088: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=kube-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?timeout=15s resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:36.556668928: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:39.623470080: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:42.690744064: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:45.761656064: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:48.833193984: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:51.915211008: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:55.004199936: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:52:58.079348992: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)

Jul 31 21:53:03 nibz-falco-dev falco: 21:53:01.160041984: Warning K8s Operation performed by user not in allowed list of users (user=br3dsptd0mfheg0375g0-admin target=cloud-controller-manager/endpoints verb=update uri=/api/v1/namespaces/kube-system/endpoints/cloud-controller-manager resp=200)
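The alerts above share a regular layout, so they are easy to break into fields for counting or filtering. Here is a minimal Python sketch; the regular expression is inferred only from the sample lines shown here, and other Falco rules format their output differently, so treat the pattern and the function names as illustrative assumptions.

```python
import re
from collections import Counter

# Pattern inferred from the sample lines above. It is specific to this one
# rule's output format and is not a general Falco log parser.
ALERT_RE = re.compile(
    r"falco: (?P<time>\S+): (?P<priority>\w+) (?P<message>.*?) "
    r"\(user=(?P<user>\S+) target=(?P<target>\S+) verb=(?P<verb>\S+) "
    r"uri=(?P<uri>\S+) resp=(?P<resp>\d+)\)"
)


def parse_alert(line):
    """Return the alert fields as a dict, or None if the line does not match."""
    match = ALERT_RE.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["resp"] = int(fields["resp"])
    return fields


def count_by_user(lines):
    """Count matching alerts per Kubernetes user, skipping unparsable lines."""
    alerts = (parse_alert(line) for line in lines)
    return Counter(alert["user"] for alert in alerts if alert is not None)
```

Feeding the lines of /var/log/falco/falco.log to count_by_user gives a quick view of which accounts trigger the rule most often, which helps when deciding who belongs on the allowed list.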

Step 3. Set up forwarding of events from Falco into LogDNA

Finally, set up IBM Log Analysis with LogDNA on IBM Cloud. You want at least 30 days of retention for this example. The following screen capture shows how the Logging page within IBM Cloud might look after you provision an instance of the IBM Log Analysis with LogDNA service.

On the Logging tab, click Edit log sources for your new logging instance. The Ubuntu/Debian Linux tab is the easiest place to find the LogDNA API key and log host. Copy the key and the host. Note that the API host and the log host are different. For this exercise, you only need the log host.

Set up an environment file or export these variables to your shell environment.

# Modify the following URL if your Log host is not in us-south

export LOGDNA_URL="https://logs.us-south.logging.cloud.ibm.com/logs/ingest"

export LOGDNA_KEY="eb250a1fedd547d6ae0a"
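A script that consumes these variables can fail early with a clear message when one of them is missing. Here is a minimal Python sketch of that check; the function name load_logdna_settings is hypothetical and is not taken from the exporter used below.

```python
import os
from urllib.parse import urlparse


def load_logdna_settings(env=None):
    """Read LOGDNA_URL and LOGDNA_KEY and return them as (url, key)."""
    env = os.environ if env is None else env
    url = env.get("LOGDNA_URL", "")
    key = env.get("LOGDNA_KEY", "")

    # Report every missing variable at once instead of one per run.
    pairs = (("LOGDNA_URL", url), ("LOGDNA_KEY", key))
    missing = [name for name, value in pairs if not value]
    if missing:
        raise ValueError("missing environment variables: " + ", ".join(missing))

    # The ingestion key travels with each request, so insist on HTTPS.
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("LOGDNA_URL should be an https URL, got: " + url)
    return url, key
```

Passing a plain dictionary as env makes the check easy to exercise without touching the real shell environment.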

Now pull down the falco-logdna exporter script.

git clone https://github.com/falcosecurity/evolution

cp -r evolution/integrations/logdna/ .

virtualenv --python=python3 venv

source venv/bin/activate

pip install -r requirements.txt

In most cases, you must relax permissions on the Falco UNIX socket. You can also use a UNIX group if 777 is too insecure for you.

sudo chmod 777 /var/run/falco.sock

Now you can run the falco-logdna exporter.

python falco-logdna.py --logdna-key ${LOGDNA_KEY} --logdna-url ${LOGDNA_URL}
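For readers curious about what forwarding an alert involves, here is a rough Python sketch that builds, but does not send, one ingestion request. It is not the code of falco-logdna.py. The request shape (a JSON body with a lines array, hostname and now query parameters, and the ingestion key as the basic-auth user name) reflects my understanding of the LogDNA ingestion API and should be checked against its documentation; the function name and default values are hypothetical.

```python
import base64
import json
import time
from urllib.parse import urlencode
from urllib.request import Request


def build_ingest_request(url, key, alert_text, hostname="falco-host", app="falco"):
    """Build a POST request carrying one Falco alert as a single log line."""
    # Assumed query parameters: the source host name and a millisecond timestamp.
    query = urlencode({"hostname": hostname, "now": int(time.time() * 1000)})
    # Assumed body shape: a list of lines, each with text, app name, and level.
    body = json.dumps(
        {"lines": [{"line": alert_text, "app": app, "level": "WARN"}]}
    ).encode("utf-8")
    # Basic auth with the ingestion key as user name and an empty password.
    token = base64.b64encode((key + ":").encode("utf-8")).decode("ascii")
    return Request(
        url + "?" + query,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token,
        },
    )
```

Passing the returned object to urllib.request.urlopen would send it. The sketch stops short of that so it can be inspected without network access or a valid key.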

Return to the Logging page and click View LogDNA to open the LogDNA UI. You should see Kubernetes security events in the LogDNA UI such as the ones in the following screen capture.

Conclusion

In this tutorial, you did the following:

1. Set up the IBM Cloud Kubernetes Service to send audit logs to a service.

2. Set up a VM with the correct networking and configuration on an IBM Cloud VPC.

3. Set up Falco to accept audit payloads from Kubernetes.

4. Configured Falco to store certain logs in LogDNA for further review.

Maricopa County Clerk of the Superior Court: Delivering effective, accurate answers to the public

The Maricopa County Clerk of the Superior Court, based in Phoenix, Arizona, receives thousands of service requests per day from the county's 4 million residents. People also turn to the office for time-sensitive requests such as filing for marriage licenses, renewing passports, and obtaining court documents. Employees of the Clerk's Office became overwhelmed with demand, which slowed already sluggish processes and added to residents' frustration.

The Clerk of the Superior Court, unlike most other IBM clients, is not in a "competitive industry," but that does not influence their mission. Their aim is to provide their residents public services in the most reliable and cost-effective way possible. To accomplish the purpose, they turned to IBM Watson.

Providing swift and reliable service to residents and employees

The Clerk of the Superior Court deployed IBM Watson Assistant to communicate with residents and employees, infusing AI into their workflows to improve customer support while reducing costs and standardizing responses across channels. The Clerk's Office first gave Watson Assistant to its employees to encourage self-service over Slack, allowing Watson Assistant to answer employees' questions, create support desk tickets for difficult problems, and produce important reports.

After that initial success, the Clerk's Office deployed Watson Assistant as a virtual agent for residents, sparing them lengthy wait times. The Clerk's Office offers a genuinely omnichannel experience, making Watson Assistant available not only on the web through its out-of-the-box web chat, but also through SMS and through voice assistants such as Alexa and Google Assistant, so that people can make appointments from home with simple voice commands.

The Clerk's Office also combined Watson Discovery, IBM's enterprise search capability, with Watson Assistant to retrieve information from documents for specific policy and operational queries.

When it was time to deploy Watson Assistant, the Clerk of the Superior Court knew it had to be smart about the rollout, because the COVID-19 pandemic was just starting. The team assumed that in such a confusing time people would only want to talk to humans rather than to virtual agents. After a soft launch, however, the team saw greater public use and satisfaction than it had predicted. Because of the shelter-in-place order, the virtual agent of the Clerk's Office was crucial in answering questions and letting people file paperwork without going to the court in person or waiting on the phone.

With Watson Assistant, residents and employees get quick, concise, and clear answers, and agents can concentrate on more complicated and rewarding work. In the first month alone, Watson Assistant handled about 70% of conversations without human intervention, and agents found that they saved over 100 hours of direct handling of inquiries.

What's next in Maricopa County for IBM Watson and the Clerk of the Superior Court?

One unintended benefit of working with Watson was that the Clerk's Office gained a better understanding of its residents. After launching Watson Assistant, the Clerk's Office obtained insights into residents' interests. Based on these insights, the office decided during COVID-19 which services to offer remotely and which actions to feature on the website, further strengthening the customer experience. "This is just one of the many advancements we have in mind," says Aaron Judy, strategist for technology innovation at the Maricopa County Clerk of the Superior Court. The Clerk's Office will next deploy Watson Assistant for Voice Communications, extending its omnichannel vision to include telephony.

How the team of IBM Data Analytics and AI Elite trains companies to cope more effectively with data science

Today the world looks different than it did last year, last month, and even yesterday. The ever-changing state of our world brings new challenges, and having the right skills becomes crucial for evolving and innovating.

According to The Quant Crunch report, however, data science skills are among the most difficult to recruit for and, if left unfilled, could cause the greatest disruption.

This is where we come in. Our team thrives on tackling data science challenges head-on. The IBM Data Science and AI Elite (DSE) partners with companies in all industries, bringing the right resources to help teams solve data science use cases and overcome AI adoption challenges.

At the beginning of 2018, Rob Thomas, IBM Senior Vice President of Cloud and Data Platform, envisioned a team of world-class data scientists that would collaborate with clients to put data science to work, and that is precisely what the DSE has done. Over the past three years and counting, IBM Data Science and AI Elite, under the leadership of approximately 100 data scientists, has completed engagements in 50 countries spanning 6 business sectors. The effect of these engagements on people around the world is resounding, from lowering carbon footprints and reducing trash on beaches to eliminating missing packages and making sure your package ships properly. We also helped companies increase accuracy by 20 percent in locating missing shipments, process logistics data 423 times faster, reduce operational costs for telecoms by 15 percent, proactively eliminate bias in a corporation's recruiting process, and more. Our latest collaborations with Highmark Health, Rolls-Royce and the Emergent Alliance, and the United Nations Environment Programme (UNEP) are only a few of the many engagements that make strong use of AI to bring progress.

From 12 months to a few days

Sepsis is not only life-threatening, with 720,000 cases annually in the U.S. and a staggering death rate of between 25 and 50 percent; it is also one of the most costly inpatient conditions in the world, costing more than $27 billion annually. Organizations such as Highmark, collaborating with the DSE, have taken enormous strides forward by using inpatient clinical data to develop models that forecast and prevent mortality from sepsis.

As Curren Katz, Director of Data Science, R&D at Highmark Health, shared in a recent project discussion, this work usually takes Highmark 12 months or more to finish. But the IBM Cloud Pak for Data platform took care of the heavy lifting behind the scenes and allowed the DSE team to return with a deployed model within just a few days.

By bringing explainability to Highmark's models, Cloud Pak for Data shone as a common platform and also made way for Highmark to respond rapidly to the growing COVID-19 crisis. As COVID-19 evolves and produces new data, the newly deployed platform gives Katz the ability to take in new research findings and contributors.

Moving the economic growth needle

In a similar way, Rolls-Royce joined IBM and scores of multinational businesses to create the Emergent Alliance, a non-profit group of technology firms and data science experts who believe that data and AI can help drive the economic recovery from COVID-19. Recent work with IBM has centered on the Emergent Alliance's recently announced challenge: how a trusted and explainable risk-pulse index could help companies emerge stronger after the pandemic.

IBM and Rolls-Royce R2 Data Labs have worked to evaluate a wide variety of global, behavioral, and opinion data in order to build economic resilience, find the pulse of an unprecedented recession, and help companies recover stronger from the pandemic.

Digging up data to remove litter on the beach

As global citizens, our decisions contribute to environmental effects, especially when it comes to marine litter and beach cleanup. The aim of the United Nations Development Programme (UNDP) was to substantially reduce marine pollution by 2025, pushing for an index that measures coastal eutrophication and floating plastic density. The DSE has joined forces with the United Nations Environment Programme (UNEP) and the Wilson Center to address the worldwide lack of a consolidated archive of marine litter data and of access to that data.

With little in place today to provide evidence on the volume of plastic polluting beaches, these partners have put their heads together to pilot a global platform for marine litter. IBM Watson Knowledge Catalog (WKC) on IBM Cloud Pak for Data allowed UNEP and IBM to easily clean, crosswalk, categorize, and conform the data and to make the right data accessible to data scientists automatically. WKC also lets citizen scientists track data sources, collaborate with other scientists, submit datasets, and use rating and tagging features to share their observations about the datasets, a major step toward removing plastic from beaches for good. Likewise, thanks to IBM Watson Assistant, we worked on Sam, the world's first interactive environmental campaigner, built to bring together researchers, communities, and politicians and get all stakeholders on board.

Helping businesses to step up time to value

These are only a handful of the many companies that have joined forces with our team to put data science to work. We also see patterns emerging across use cases, which give the team the opportunity to build industry accelerators based on real client experiences. Industry accelerators can dramatically reduce the time to value of the next data science project by packaging use-case-specific, relevant assets for model rollout.

In addition, our team has developed a Remote Data Science Toolkit as a contribution to the community, to help data scientists worldwide do their best work from home in light of the circumstances created by the global pandemic.

It is fascinating to look back on what our clients and organizations around the world have achieved with the support of the IBM Data Science and AI Elite. Yet there is still more work to be done as demand continues to change. Looking forward, the digital revolution will pave the way for the next phase of AI for organizations. I look forward to seeing how the DSE continues to help customers change the world around us, adapt to market trends, and put data science to work.

How to operate a WordPress multi-tenant application on IBM Cloud Kubernetes Service

This tutorial demonstrates the real potential of Kubernetes clusters and shows how to deploy the world's most popular website framework on top of the world's most popular container orchestration platform. We offer a complete roadmap for hosting WordPress on a Kubernetes cluster. Each component runs in a separate container or group of containers.

WordPress is a popular platform for editing and publishing web content. In this tutorial, I'm going to walk you through how to create a highly available (HA) WordPress deployment using Kubernetes. WordPress consists of two main components: the WordPress PHP server and a database that stores user information, posts, and site data. Both need to be highly available for the entire application to be fault tolerant. WordPress is a traditional multi-tier app, and each component will have its own container(s). The WordPress containers will be the frontend tier and the MySQL container will be the database/backend tier of WordPress.